The compounding impact of Artificial Intelligence and Robotics on the future of learning: Focus on RobotGPTs

Report Brief

Can robots powered by Artificial Intelligence (AI) accelerate the outreach and impact of capacity building activities aimed at social development?

The International Training Centre of the International Labour Organization (ITCILO) provides a safe space for experimentation with innovative capacity development solutions that harness emerging technologies to promote social justice through decent work, including AI-powered robots to boost learning and collaboration. This digital brief captures selected findings from action research on the near-future feasibility of deploying these RobotGPTs.

Rethinking learning and capacity development with RobotGPTs

Recent technological breakthroughs in AI have given robot development new impetus. In a nutshell, thanks to Large Language Models like ChatGPT, real-time speech interaction between robots and humans during learning and collaboration activities has moved from science fiction to near-future feasibility – opening the door to a new paradigm in education.

What are RobotGPTs and what is the difference between a RobotGPT and a Cobot?

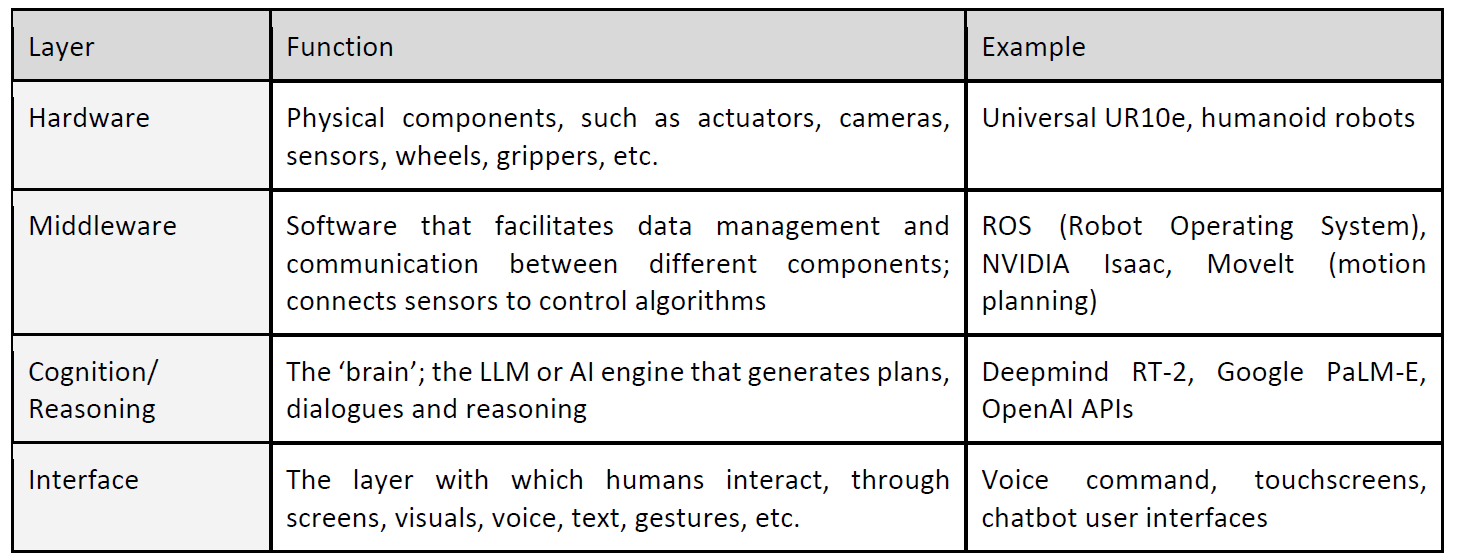

The term RobotGPTs refers to robotic systems whose high-level intelligence is driven by Large Language Models (LLMs). These systems combine multiple layers:

Collaborative robots, or Cobots for short, are robots designed to work alongside humans. Cobots are typically predictable, sociable, adaptable, safe, and intuitive. They are already used selectively in manufacturing, warehousing, healthcare, hospitality, and research. Cobots are furthermore typically action-specific, designed to perform only a certain set of functions, and are therefore generally not humanoid. RobotGPTs extend Cobots by adding conversational intelligence and high-level planning.

Could RobotGPTs become important for providers of adult learning services?

Two proxy indicators for tracking the dynamics of a technology domain are investments and patents. Large technology companies and well-funded start-ups are investing significantly in general-purpose humanoid robots, and patent applications have increased rapidly over the last ten years. Most recently, the number of patent applications has declined, presumably not because interest is waning but because the technology is maturing, with the focus shifting towards product quality and commercialization rather than patent quantity.

Potential uses of RobotGPTs in adult learning include:

- Translation and interpretation. Robots acting as interpreters during training sessions, providing real-time translation and cultural context. This is particularly relevant for ITCILO’s multilingual audience.

- Contextualized instruction. LLM integration allows robots to provide detailed explanations, answer questions on the fly, and adapt instructions to learners’ prior knowledge. In training, robots could refer to regulations and adjust complexity.

- Predictive modelling and simulation. Robots could run safety drills or predictive scenarios in training labs, using LLMs to describe underlying physics or labour-law implications.

- Facilitating group work. Robots may mediate group discussions, ask probing questions, and ensure inclusive participation.

- Scalability and remote learning. Robots could facilitate blended or remote sessions by acting as local proxies for instructors, streaming content, and interacting physically with equipment on behalf of remote participants. Combined with XR, robots could create more immersive experiences.

- Data-driven feedback and kinesthetic learning. Sensors could record learners’ interactions, enabling analysis of performance and personalized feedback. Ethical data governance is required to protect privacy. By physically demonstrating tasks and allowing learners to imitate, robots can enhance muscle memory and motor skill acquisition.

Positioning RobotGPTs at the intersection between AI and Robotics

Advanced robots can be categorized by mobility, morphology, and autonomy:

- Industrial robots. Fixed robots used in manufacturing or warehousing for repetitive tasks. Cobots add sensors and software to safely interact with humans. ISO 10218-2:2025 outlines safety requirements for such installations.

- Service and social robots. Mobile robots used in healthcare, hospitality and domestic contexts. Examples include Pepper and Nao (developed by SoftBank Robotics), interactive humanoids used for reception and language teaching.

- Humanoid general-purpose robots. Robots designed to imitate human form and dexterity. Tesla’s Optimus, Sanctuary AI’s Phoenix, Figure’s Figure 01, and 1X’s Neo represent this emerging category. They use LLM-based cognition to perform natural language tasks and physical manipulation.

- Embodied AI agents. Agents that combine LLMs with multimodal perception (vision, audio, tactile) and reinforcement learning. Models like PaLM-E and RT-2 fit here. These agents generalize across tasks and environments.

LLMs are the ‘cognitive core’ that makes these robots generative and adaptive. LLMs are advanced neural networks trained on massive textual data sets to predict the next word and generate fitting prose. Their training equips them with a form of semantic knowledge and the ability to reason in language, and when integrated with robotics they can interpret natural-language instructions and formulate high-level plans. However, LLMs on their own do not have a built-in understanding of the physical world: they have never seen objects or felt their weight, and they have not experienced spatial constraints. In purely text-based form, an LLM cannot know what a command as basic as “grasp the bottle” means in terms of joint trajectories or tactile feedback. Simply connecting a chatbot to a robot can therefore yield plausible but ungrounded commands, resulting in unrealistic or unsafe plans. To make language models useful for embodied agents, they must be coupled with sensory data, control policies, and planning frameworks.
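To make the grounding problem concrete, the following minimal Python sketch checks a language-generated plan against what a robot can actually do and currently perceive. It is an illustration only: the skill library, the `Step` type, the object properties, and the example plan are all hypothetical and do not describe any of the systems discussed in this brief.

```python
from dataclasses import dataclass

# Hypothetical skill library (an assumption for illustration):
# skill name -> property the target object must have ("any" = no requirement).
SKILLS = {"grasp": "graspable", "open": "openable", "move_to": "any"}


@dataclass(frozen=True)
class Step:
    skill: str   # action name proposed by the language model
    target: str  # object the action refers to


def ungrounded_steps(plan, perceived):
    """Return (step, reason) pairs for steps the robot cannot ground.

    `perceived` maps the names of objects currently detected by the
    robot's sensors to a set of properties, e.g. {"graspable"}.
    """
    problems = []
    for step in plan:
        required = SKILLS.get(step.skill)
        props = perceived.get(step.target)
        if required is None:
            problems.append((step, "unknown skill"))
        elif props is None:
            problems.append((step, "object not perceived"))
        elif required != "any" and required not in props:
            problems.append((step, f"object is not {required}"))
    return problems


# A plausible-sounding plan, as a text-only model might produce it.
plan = [Step("grasp", "bottle"), Step("pour", "bottle"), Step("open", "door")]
# What the (hypothetical) perception layer reports right now.
perceived = {"bottle": {"graspable"}, "table": set()}

issues = ungrounded_steps(plan, perceived)
for step, reason in issues:
    print(f"{step.skill}({step.target}): {reason}")
```

In this toy case the plan reads sensibly as text, yet two of its three steps cannot be executed: the robot has no "pour" skill and sees no door. Real systems address the same gap with far richer perception and control layers.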

The following approaches illustrate different attempts to ground language in perception and action:

- SayCan. This 2022 approach uses an LLM (originally PaLM) to rate how useful each available high-level skill is for a given instruction, while a value function estimates how feasible that skill is in the robot's current situation. Combining both yields sequences that are both useful and possible, reducing errors compared to earlier models.

- PaLM-E. Announced by Google in 2023, PaLM-E feeds raw robot sensor data into a PaLM-like language model, enabling the model to perform both language and vision tasks and transfer knowledge across modalities.

- RT-2 (Robotics Transformer 2). Google DeepMind’s 2023/2024 model fine-tunes a pre-trained vision-language model with robot data by representing actions as textual tokens. It can interpret unseen commands, reason about objects and reuse knowledge to select improvised tools.

- Gemini Robotics and Gemini Robotics-ER. Announced in March 2025, these models integrate vision, language, and action to enable robots to handle objects and perform delicate manipulations such as folding origami. The embodied reasoning (ER) variant provides spatial understanding and planning.

- RoboGPT and RoboPlanner. The 2024 RoboGPT framework combines a planning module, trained on 67K planning examples, that divides tasks into sub-goals, a skills module, and a re-planning module. It shows improved performance on long-horizon tasks.

- ELLMER (Embodied LLM with Multimodal Example Retrieval). A 2025 study uses GPT-4 with retrieval-augmented generation to produce action plans conditioned on vision and force feedback. The system enabled a robot to make coffee and decorate plates in unpredictable conditions.
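The core idea behind SayCan, combining a language-based usefulness estimate with a feasibility estimate, can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not the published implementation: the function name `select_skill`, the skill names, and all numbers are invented for the example, and in a real system the two sets of scores would come from an LLM and a learned value function respectively.

```python
def select_skill(llm_scores, affordances):
    """Pick the skill with the highest usefulness x feasibility product.

    llm_scores:  skill -> how useful the language model rates the skill
                 for the current instruction (0..1).
    affordances: skill -> how likely the skill is to succeed in the
                 current physical situation (0..1); missing skills count as 0.
    """
    combined = {
        skill: llm_scores[skill] * affordances.get(skill, 0.0)
        for skill in llm_scores
    }
    best = max(combined, key=combined.get)
    return best, combined


# Illustrative numbers only (hypothetical instruction: "clean up the spill").
llm_scores = {"pick up sponge": 0.6, "go to sink": 0.3, "pick up apple": 0.1}
# The sponge is not within reach, so picking it up is currently unlikely to work.
affordances = {"pick up sponge": 0.1, "go to sink": 0.9, "pick up apple": 0.8}

best, combined = select_skill(llm_scores, affordances)
print(best)
```

With these placeholder values, the language model alone would prefer "pick up sponge", but the combined score selects "go to sink" because it is both relevant and currently feasible. Repeating the selection step by step yields a sequence of actions that stays grounded in what the robot can do.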

Will RobotGPTs become ‘real’ any time soon? Selected research findings

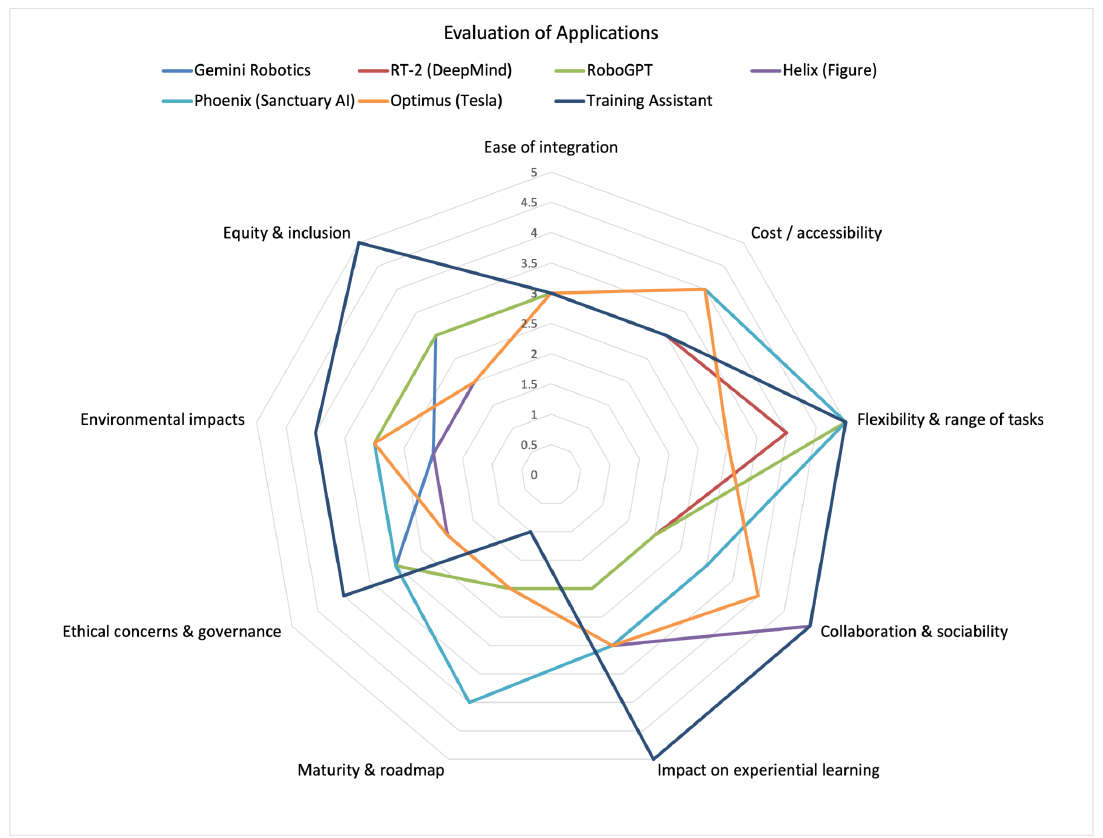

ITCILO puts strong emphasis on the ‘action’ in its action research activities, with the explicit objective of feeding the findings into product pilots and sandbox experiments. For this reason, the action research on the future of learning with RobotGPTs concentrated on the near future up to 2030, which can be considered the first horizon for significant experimentation and pilot deployment. The research accordingly focused on assessing selected collaborative robot applications using LLMs that are either already available as prototypes or close to deployment. These applications were assessed through the lens of an adult learning service provider outside the formal education system, and against nine criteria: (ease of) integration into learning service cycles, cost, flexibility, collaboration potential, learning impact, maturity, ethical issues, environmental footprint, and equity.
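For readers who want to run a similar assessment themselves, a multi-criteria comparison of this kind can be sketched as a simple weighted average. The Python snippet below is a hypothetical illustration only: the 1 to 5 rating scale (higher meaning more favourable, so a high cost rating means more affordable), the weights, and the ratings for the fictitious "system A" are assumptions made for the example and are not the estimates produced by the action research.

```python
# The nine criteria named above, as short identifiers.
CRITERIA = [
    "integration", "cost", "flexibility", "collaboration",
    "learning_impact", "maturity", "ethics", "environment", "equity",
]


def weighted_score(ratings, weights=None):
    """Weighted average of ratings across all nine criteria.

    ratings: criterion -> rating on an assumed 1..5 scale
             (higher = more favourable for the learning provider).
    weights: optional criterion -> relative importance; equal if omitted.
    """
    if weights is None:
        weights = {c: 1.0 for c in CRITERIA}
    missing = [c for c in CRITERIA if c not in ratings]
    if missing:
        raise ValueError(f"missing ratings for: {missing}")
    total_weight = sum(weights[c] for c in CRITERIA)
    return sum(ratings[c] * weights[c] for c in CRITERIA) / total_weight


# Placeholder ratings for a fictitious "system A" (not research results).
system_a = {
    "integration": 2, "cost": 1, "flexibility": 4, "collaboration": 3,
    "learning_impact": 3, "maturity": 2, "ethics": 2, "environment": 2,
    "equity": 1,
}

# Example weighting: a provider that cares twice as much about cost and equity.
equity_focused = {c: 1.0 for c in CRITERIA}
equity_focused["cost"] = 2.0
equity_focused["equity"] = 2.0

print(round(weighted_score(system_a), 2))
print(round(weighted_score(system_a, equity_focused), 2))
```

Making the weights explicit is the main design choice here: different institutions can apply their own priorities to the same qualitative ratings and see how the ranking of candidate systems shifts.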

Multi-criteria evaluation of RobotGPT applications (qualitative estimates)

Several common patterns and considerations emerge when comparing the competing systems across these evaluation criteria:

- Flexibility is strong across most systems. Models like Gemini Robotics and Helix demonstrate generalization to diverse tasks, and emerging research frameworks emphasize long-horizon planning. This suggests that versatility is quickly becoming a baseline expectation for AI-powered robots.

- Cost and accessibility remain major barriers. All commercial prototypes are assessed as high-cost and therefore out of reach for most users, which limits adoption to well-resourced institutions. However, novel financing models and the continuous development of the technologies are likely to lower the pricing barriers within the next few years.

- Sociability and human interaction vary widely. Helix and Optimus are designed with natural language interfaces and human-like interaction in mind. In contrast, RT-2 and RoboGPT focus on navigation and manipulation, offering little in the way of social engagement. This underscores a critical bifurcation between industrial tools and general-purpose service robots.

- Maturity levels are low. Most systems are prototypes or early-generation models. Even Sanctuary AI’s seventh-generation Phoenix is described as moving toward commercial readiness with only pilot deployments thus far. Few producers have clear roadmaps to widespread deployment by 2030, indicating that the market is still several years from maturity.

- Environmental impact. Manufacturing and operating robots consume energy and resources. Sanctuary AI’s use of miniaturized hydraulics reduces power consumption, but large robots still have significant carbon footprints.

- Ethical and governance issues are pervasive. Across the board, concerns include potential job displacement, user privacy, and safety in physical interaction. LLM‑powered robots generate content based on training data. Protecting intellectual property rights and ensuring accurate attribution is critical in educational contexts. ILO’s 2025 research brief Work Transformed states that “The potential for discrimination embedded in algorithmic decision-making raises ethical concerns, and over-reliance on automation can lead to skill degradation and new forms of inequality”. Additionally, most systems still lack clear sustainability strategies. The ILO emphasizes that “Trust in AI will not emerge from technology alone—it must be earned through inclusive policymaking, ethical design, and institutional safeguards”.

Taken together, these trends generally suggest that while the technology is advancing quickly in terms of task flexibility and human-machine interaction, significant gaps remain in affordability, maturity, governance, and sustainability.

Conclusions

RobotGPTs are an intriguing yet immature class of technologies. By combining large‑language‑model cognition with advanced robotics, they offer natural language interfaces, planning and reasoning, and embodied interaction. Prototypes such as Gemini Robotics, Helix, Phoenix, and Optimus demonstrate rapid advances in dexterity and collaboration. However, costs remain prohibitive, and most models are still in early stages of development. Safety, reliability, and ethical concerns further limit their readiness for widespread deployment. Yet the long‑term potential is significant: as AI and robotics continue to converge, costs will fall and capabilities will grow, creating opportunities to enhance adult learning through demonstration, simulation, translation, and facilitation.

Recommendations

Strategic Positioning

1. Focus on complementarity. RobotGPTs should complement, not replace, human trainers. Use cases could include demonstration of complex research tasks, translation, and real-time feedback. Prioritize applications that address accessibility and inclusion, such as language interpretation or regulatory clarification.

2. Human-oriented by design. Commit to human‑centred design that involves stakeholders in co‑creation, mapping social contexts, measuring impacts, and managing risks.

3. Promote interoperable ecosystems. Encourage the use of open‑source software and standard interfaces to avoid vendor lock‑in.

Capacity development initiative proposals

1. Launch RobotGPT Foresight Labs: Partner with universities and technology hubs to create immersive foresight studios combining VR, digital twins, and LLM‑enabled robots. These labs would let stakeholders “time‑travel” into possible futures and design policies that anticipate technological breakthroughs before they hit the mainstream.

2. AI‑Powered Peer Mentoring: Pair learners with “robot mentors” programmed using open LLMs and local labour laws. These robots would facilitate peer learning, moderate group simulations, and help participants practice complex negotiation or safety scenarios.

3. Campus Pilot of a Test Humanoid RobotGPT: ITCILO could pilot a humanoid RobotGPT on campus as a live prototype to assist with training activities, particularly in language support, research assistance and teamwork facilitation. The robot would integrate a multilingual large language model with vision and sensor data (similar to systems like PaLM‑E), enabling it to translate between languages, answer participant queries by retrieving and summarizing relevant materials, and moderate group exercises. By evaluating how learners and trainers interact with the prototype in real training scenarios, ITCILO can generate evidence‑based insights on the potential and limitations of RobotGPTs.

For the long read of the action research findings and a list of reference documents