Evaluate: Monitoring and Evaluation

- What for?

- Tools/ Kirkpatrick Model

- Tools/ After action review

1. What for?

Continuous monitoring and evaluation indicate whether we are on the right track to achieve the learning results we want from the learning process. They answer the following questions: Are we implementing what we designed? Are the participants responding as we anticipated? Are we on our way to achieving the learning objectives?

Monitoring and evaluation involve a continuous process of collecting data on the aspects relevant to the learning process. We need to analyze the data constantly during the training, not just afterwards, in order to track progress toward the learning objectives and expected training results, and to ensure that any necessary adjustments and adaptations are implemented.

2. Tools/ Kirkpatrick Model

References

Donald L Kirkpatrick's training evaluation model - the four levels of learning evaluation, Businessballs: https://www.businessballs.com/facilitation-workshops-and-training/kirkpatrick-evaluation-method/

Kirkpatrick Partners: www.kirkpatrickpartners.com

An Introduction to the New World Kirkpatrick® Model, Jim Kirkpatrick, PhD and Wendy Kirkpatrick: http://www.kirkpatrickpartners.com/Portals/0/Resources/White%20Papers/Introduction%20to%20the%20Kirkpatrick%20New%20World%20Model.pdf

The New World Level 1 Reaction Sheets, Jim Kirkpatrick, PhD, www.kirkpatrickpartners.com: https://www.kirkpatrickpartners.com/Portals/0/Storage/The%20new%20world%20level%201%20reaction%20sheets.pdf

New World Level 2: The Importance of Learner Confidence and Commitment, Jim Kirkpatrick, PhD and Wendy Kayser Kirkpatrick, www.kirkpatrickpartners.com: https://www.kirkpatrickpartners.com/Portals/0/Storage/New%20world%20level%202%207%2010.pdf

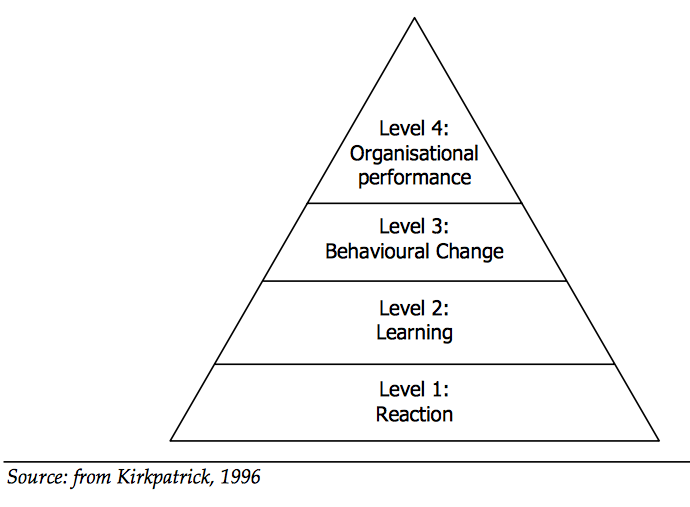

One of the best-known and most widely used tools for evaluating learning is the Kirkpatrick Model. It provides a comprehensive way of evaluating training, looking not only at participants’ satisfaction and knowledge/skills development, but also at behavioural change and organizational impact.

The model proposes four levels:

- Level 1: Reaction (How did participants react to the training?)

- Level 2: Learning (Did the participants reach the learning objectives? What did they learn? What competencies have they developed?)

- Level 3: Behaviour/Transfer (How are they using what they learned in training within their workplace? What behaviour has changed? What is the improved performance?)

- Level 4: Organizational performance/ Results (What is the impact of the behaviour change on the organization?)

Using all four levels ensures a full, thorough, and meaningful evaluation. It does, however, usually require more resources (e.g. time or money) the higher the level. Generally, training providers can go up to Level 3 while still relying mostly on the participants’ self-perception. It is mostly up to the client to decide if and how they want to evaluate further. It is a good idea, and a sign of professionalism, to discuss this with your clients. Decide together how deep and wide the training evaluation will be, which levels are relevant to look into, which methods should be used, and what resources need to be invested.

Useful tip

The model can also be used as an instrument for analysis and training design, working from the top down with the following guiding questions:

- Level 4: Results/ Organizational performance (What changes do we need at the organizational level?)

- Level 3: Behaviour (What behaviour/ improved performance of the employees will support or determine the organizational performance defined?)

- Level 2: Learning (What do employees need to learn in order to be able to perform in a way that is required? How do they need to learn it?)

- Level 1: Reaction (How do participants need to feel during the learning? How should they react in order to achieve the defined learning outcomes?)

More information about Levels 1 through 4

Level 1: Reaction

At the reaction level, you monitor and evaluate how people felt during your training and how they reacted to the learning activities, group dynamics, and training approach. You look into the degree to which participants find the training favourable, engaging, and relevant to their jobs.

As you might remember, adults learn best when they are relaxed and having fun, are curious and engaged in the learning process, and feel that the learning is relevant to them. Aim for these results.

You can monitor reaction on the spot, during the training, by asking specific questions in plenary or on short written evaluation sheets, or through discussions in reflection groups that then report back to plenary. You should also evaluate reaction in your final training evaluation, whether on the spot or with online evaluation forms.

Level 2: Learning

At the learning level, you monitor and evaluate whether participants reached the learning objectives: the degree to which they acquired the intended knowledge, skills, and attitudes, as well as confidence and commitment, through their participation in the training. This may be done through pre-training and post-training assessments that take the form of case studies, simulations, and practical exercises. An equally important component is the participants’ self-perceived increase in competencies in relation to the training objectives.

Level 3: Behaviour/Transfer

At the behaviour level, you monitor and evaluate the actual behaviour change of participants as a result of the training experience, and the degree to which they apply what they learned once they are back on the job. This is a more complex evaluation stage, and it is highly dependent on your clients because it usually happens after people have returned to their jobs. It is therefore important to discuss with your clients how interested they are in this level of evaluation and how many resources they are willing to invest in it.

Still, part of this evaluation phase is the participants’ self-perception, and that is information you can easily collect and analyze as a training provider. You can monitor and evaluate people’s understanding of, and ideas for, transferring the learning outcomes from the training to their workplace (during the training), as well as the extent to which they feel and think their behaviour has changed as a result of the training (immediately or some time after the training).

Level 4: Results/Organizational Performance

At this level, you assess the actual impact of the training on the whole company or organization. Evaluation at this level is generally conducted by the client itself and looks at a whole training or learning strategy.

You can, however, explore how participants think their training will influence the organization's performance in achieving its business strategy. You can also help participants understand that their training is part of a bigger picture and that they are accountable for the learning and behaviour change that will lead to organizational change.

These are some questions you can use on the evaluation forms or in discussions with learners:

- What ultimate impact do you think you might contribute to the organization as you successfully apply what you learned?

- What do you anticipate will be the positive result of your efforts related to this training?

- I anticipate and am confident that I will eventually see positive results as a result of my efforts. (Choose from: strongly disagree, disagree, agree and strongly agree)

Self-perception questions to monitor/evaluate

Level 1: Reaction

You can use some of the following questions either as part of a questionnaire or as part of discussions and other participatory evaluation methods in order to monitor/evaluate the learners’ reaction to the training.

- Invite participants to evaluate each of the following aspects by using the scale: strongly disagree, disagree, agree or strongly agree:

    - I was able to relate each of the learning objectives to the learning I achieved.

    - I was appropriately challenged by the material.

    - I found the course materials easy to navigate.

    - I felt that the course materials will be essential for my success.

    - I will be able to immediately apply what I learned.

    - My learning was enhanced by the knowledge of the facilitator.

    - My learning was enhanced by the experiences shared by the facilitator.

    - I was well engaged during the session.

    - It was easy for me to get actively involved during the session.

    - I was comfortable with the pace of the program.

    - I was comfortable with the duration of the session.

    - I was given ample opportunity to get answers to my questions.

    - I was given ample opportunity to practice the skills I was asked to learn.

    - I was given ample opportunity to demonstrate my knowledge.

    - I was given ample opportunity to demonstrate my skills.

    - I felt refreshed after the breaks.

    - I found the room atmosphere to be comfortable.

    - I was pleased with the room set-up.

    - I experienced minimal distractions during the session.

    - I think this training was a good use of my time.

    - I think the training is relevant for my professional context.

    - I think what I learned is practical and has great potential to be applied in my job.

- Invite participants to answer open-ended questions:

    - How would you describe the training?

    - How would you describe your own participation in the training?

    - How would you describe the group of participants?

    - How would you describe the training team?

    - How would you describe the methods used in the training?

    - How relevant was this training for you?

- Invite participants to rate the following aspects (from 1 = very bad to 5 = very good), and offer space for comments:

    - My own learning process

    - Logistics (training room, equipment, materials, breaks, timing, etc.)

    - Training structure

    - Training content

    - Training methods

    - Group of participants

    - The training team

    - Relevance of the training to my current work context
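If you collect the agreement-scale answers electronically, a few lines of code can summarize them per statement. The following is only a minimal sketch in Python: the function name `summarize_agreement` and the sample answers are hypothetical, and it assumes each answer is recorded as one of the four scale labels used above.

```python
from collections import Counter

# The four-point agreement scale used for the statements above.
SCALE = ["strongly disagree", "disagree", "agree", "strongly agree"]


def summarize_agreement(responses):
    """Return the count per scale option and the share of agreeing answers."""
    counts = Counter(responses)
    unknown = set(counts) - set(SCALE)
    if unknown:
        raise ValueError(f"answers outside the scale: {sorted(unknown)}")
    total = len(responses)
    agreeing = counts["agree"] + counts["strongly agree"]
    share = agreeing / total if total else 0.0
    # Report every option, including those nobody chose.
    return {option: counts[option] for option in SCALE}, share


# Hypothetical answers to "I was well engaged during the session."
answers = ["agree", "strongly agree", "disagree", "agree", "strongly agree"]
counts, share_agreeing = summarize_agreement(answers)
print(counts)
print(f"{share_agreeing:.0%} agree or strongly agree")
```

A summary like this is only a starting point. The open-ended answers and comments usually explain why participants agreed or disagreed.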

Do you want to know more? See:

The New World Level 1 Reaction Sheets, Jim Kirkpatrick, PhD, www.kirkpatrickpartners.com: https://www.kirkpatrickpartners.com/Portals/0/Storage/The%20new%20world%20level%201%20reaction%20sheets.pdf

Level 2: Learning

You can use some of the following questions either as part of a questionnaire or as part of discussions and other participatory evaluation methods in order to monitor/evaluate the participants’ learning as a result of the sessions/training:

- Invite participants to answer scaling questions:

    - I understood the objectives that were outlined during the course. (Choose from: strongly disagree, disagree, agree and strongly agree)

    - I think that the objectives of the session/training have been achieved. (Choose from: strongly disagree, disagree, agree and strongly agree)

    - To what degree are you confident that you will be able to apply what you learned in this course on the job? (Use a 5-point scale from 1 = not at all confident to 5 = totally confident)

    - To what degree are you committed to applying what you have learned? (Use a 5-point scale from 1 = not at all committed to 5 = totally committed)

- Invite participants to answer open-ended questions:

    - What were the three most important things you learned from this session/ training?

    - What competencies do you feel you have developed in relation to the training topic?

    - What knowledge/ skills/ attitudes do you feel you have acquired in relation to the training topic?

    - Which were the most relevant training sessions/moments for you? Please tell us why.

    - What would you change in the training to make it more useful?

- Invite participants to evaluate their competencies after the training: provide a list of knowledge, skills, behaviours, and attitudes and invite people to rate them according to the following possible scales:

    - 1 = Not at all Competent; 2 = Little Competence; 3 = Moderately Competent; 4 = Fairly Competent; 5 = Very Competent; N/A = This competency is not applicable to my job

    OR

    - 1 = No knowledge/skill; 2 = A little knowledge/skill but considerable development required; 3 = Some knowledge/skill but development required; 4 = Good level of knowledge/skill displayed, with a little development required; 5 = Fully knowledgeable/skilled – no/very little development required; N/A = This competency is not applicable to my job

- Invite people to share additional comments if they wish.

- Note: It is useful to ask these same competency evaluation questions before the training (possibly as part of the learning needs assessment phase) and to compare the pre-training and post-training results to understand the impact of the training on learning.
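The comparison of pre-training and post-training self-ratings described in the note above can be done by hand or in a spreadsheet. As an illustration only, here is a minimal Python sketch. The function name `competency_gains`, the competency names, and the ratings are all hypothetical, and it assumes ratings on the 1 to 5 scale with N/A answers stored as `None`.

```python
def competency_gains(pre, post):
    """Compare pre- and post-training self-ratings per competency.

    Ratings use the 1-5 scale; None stands for an N/A answer and is skipped.
    """
    gains = {}
    for competency, before in pre.items():
        after = post.get(competency)
        if before is None or after is None:
            continue  # N/A or missing in either round: nothing to compare
        gains[competency] = after - before
    return gains


# Hypothetical self-ratings from one participant (assumption, not real data).
pre = {"giving feedback": 2, "facilitation": 3, "budgeting": None}
post = {"giving feedback": 4, "facilitation": 4, "budgeting": None}

gains = competency_gains(pre, post)
average_gain = sum(gains.values()) / len(gains) if gains else 0.0
print(gains)
print(f"average gain: {average_gain:.1f} points")
```

Keep in mind that these are self-perceived changes. A positive gain suggests that participants feel more competent, not that their competence has been independently verified.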

Do you want to know more? See:

New World Level 2: The Importance of Learner Confidence and Commitment, Jim Kirkpatrick, PhD and Wendy Kayser Kirkpatrick, www.kirkpatrickpartners.com: https://www.kirkpatrickpartners.com/Portals/0/Storage/New%20world%20level%202%207%2010.pdf

Level 3: Behaviour/Transfer

You can use some of the following questions as part of a questionnaire format, or as part of discussions and other participatory evaluation methods in order to monitor/evaluate the participants’ planned or existing behaviour change as a result of the training:

- Evaluating people’s understandings and plans on how to transfer and implement the learning outcomes from the training to their workplace (during the training)

    - Invite participants to evaluate each of the following aspects by using the scale: strongly disagree, disagree, agree and strongly agree:

        - I am clear about what is expected of me as a result of going through this training.

        - I will be able to apply on the job what I learned during this session/training.

        - I do not anticipate any barriers to applying what I learned.

    - Open-ended questions:

        - From what you learned, what do you plan to apply back at your job?

        - What kind of help might you need to apply what you learned?

        - What barriers do you anticipate you might encounter as you attempt to put these new skills into practice?

        - What ideas do you have for overcoming the barriers you mentioned?

        - How would you hope to change your practice after this training?

- Evaluating the extent to which people feel and think their behaviour has changed as a result of the training (questions to be addressed and answered after the training)

    - How did your practice change as a result of the training you took part in (name the training, dates, etc.)?

    - What has proven to be most useful from the training now that one week, one month, six months, etc. have passed?

    - What are you using the most that you have learned within the training?

    - How have you applied the knowledge/skills/attitudes acquired within the training in your current workplace?

    - What are you using from what you have learned within the training?

    - How did you use that knowledge/skill/attitude?

    - What are you so satisfied with related to the topic that you would continue doing?

    - What do you feel you still need to develop?

    - What do you foresee using in the future from what we have learned within the training?

    - What would you change in the training to make it more useful?

Food for thought!

- What kind of monitoring and evaluation methods do you usually use?

- Which levels (from the Kirkpatrick Model) do you usually conduct the monitoring and evaluation on?

- What other methods/levels could you integrate in your training? What would be the benefit?

- What would a relevant evaluation form/questionnaire look like for your next training?

3. Tools/ After action review

When you pilot a new learning activity you have designed, or deliver a training, it is crucial to develop an evaluation strategy that allows you to assess and adjust content, methodologies, and learning processes after each session. Always build regular evaluations into your design, so that you obtain data while content and methodologies are still fresh in the participants’ minds.

If you work in a team, also plan moments for ongoing evaluation by the facilitators, experts, and staff through regular debriefing. This will allow you to adjust the programme according to the team's observations and participant feedback.

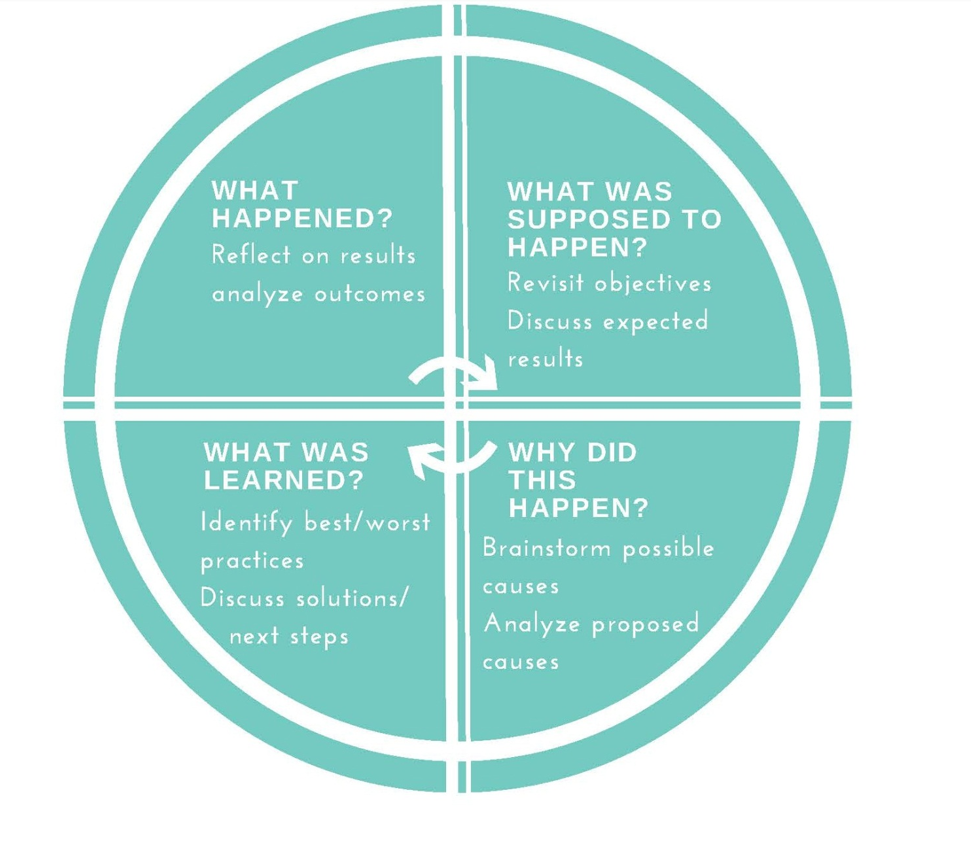

After Action Review (AAR) is a fast and simple tool for examining the results of a session, module, or entire activity. It can be used both with the learners and by the team. The AAR fosters learning-focused discussions to assess what happened, why, and what to do differently.

Discuss the different elements shown in the graphic, asking open-ended questions.

Do you want to use online platforms and tools for monitoring and evaluation? Check out some examples here.